The software required to upgrade Oracle Grid Infrastructure and the RAC database from 11.2.0.1.0 to patchset update 11.2.0.3.10 is as follows:

| Sr. No. | HOME | Description | Software Zip File |
|---|---|---|---|
| 1. | 11.2.0.1 RDBMS_HOME | RACcheck Readiness Assessment Tool (Support Note 1457357.1) | orachk.zip |
| 2. | GRID & RDBMS HOME | Latest OPatch utility | p6880880_112000_Linux-x86-64.zip |
| 3. | 11.2.0.1 GRID_HOME | Grid Infrastructure one-off patches for the source cluster home (recommended by the orachk utility) | p9413827_112010_Linux-x86-64.zip, p12652740_112010_Linux-x86-64.zip |
| 4. | 11.2.0.3 GRID_HOME | 11.2.0.3.0 Grid Infrastructure software | p10404530_112030_Linux-x86-64_3of7.zip |
| | | Grid Infrastructure 11.2.0.3.10 patchset update (includes DB PSU 11.2.0.3.10) | p18139678_112030_Linux-x86-64.zip |
| 5. | 11.2.0.3 RDBMS_HOME | 11.2.0.3.0 database software | p10404530_112030_Linux-x86-64_1of7.zip, p10404530_112030_Linux-x86-64_2of7.zip |
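Before you start, you can confirm that every file in the table has been downloaded. The shell function below is a minimal sketch. It assumes all zip files are staged in one directory; the directory and the function name `check_staged_software` are hypothetical. It only checks that the files exist, not that they are intact.

```shell
# Hypothetical pre-flight check: every zip file named in the software table,
# expected in a single staging directory (the layout is an assumption).
REQUIRED_ZIPS="orachk.zip
p6880880_112000_Linux-x86-64.zip
p9413827_112010_Linux-x86-64.zip
p12652740_112010_Linux-x86-64.zip
p10404530_112030_Linux-x86-64_1of7.zip
p10404530_112030_Linux-x86-64_2of7.zip
p10404530_112030_Linux-x86-64_3of7.zip
p18139678_112030_Linux-x86-64.zip"

# Prints "MISSING: <file>" for each absent zip; returns 1 if any are absent.
check_staged_software() {
  stage_dir="$1"
  missing=0
  for f in $REQUIRED_ZIPS; do
    if [ ! -f "$stage_dir/$f" ]; then
      echo "MISSING: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, running `check_staged_software` against a hypothetical staging directory lists anything still missing. It returns a non-zero status until the set is complete.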

1. Run the Oracle RACcheck upgrade readiness assessment tool.

(a) Download the latest RACcheck configuration audit tool from Oracle Support Note 1457357.1. This tool should be run as the RDBMS owner.

(b) Unzip the orachk.zip file:

unzip orachk.zip

(c) Change the permissions of the unzipped orachk to 755:

chmod 755 orachk

(d) Execute orachk in pre-upgrade mode as the RDBMS user and follow the on-screen prompts:

./orachk -u -o pre

(e) Review the HTML report generated by the orachk readiness assessment tool.
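Steps (c) and (d) can be wrapped in a small shell function that also enforces the "run as the RDBMS owner" rule. This is a sketch with two assumptions. First, the orachk script was unzipped into the directory passed as the first argument. Second, the owner of the 11.2.0.1 RDBMS home (the second argument) is the RDBMS owner. The name `run_orachk_preupgrade` is hypothetical.

```shell
# Sketch: run orachk in pre-upgrade mode only as the owner of the RDBMS home.
run_orachk_preupgrade() {
  orachk_dir="$1"   # directory containing the unzipped orachk script
  rdbms_home="$2"   # 11.2.0.1 RDBMS home; its owner must run the tool
  home_owner=$(ls -ld "$rdbms_home" 2>/dev/null | awk '{print $3}')
  if [ -z "$home_owner" ]; then
    echo "cannot determine the owner of $rdbms_home" >&2
    return 1
  fi
  if [ "$(id -un)" != "$home_owner" ]; then
    echo "run orachk as $home_owner, not $(id -un)" >&2
    return 1
  fi
  chmod 755 "$orachk_dir/orachk" || return 1
  # -u -o pre: upgrade readiness checks in pre-upgrade mode, as in step (d)
  (cd "$orachk_dir" && ./orachk -u -o pre)
}
```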

2. Run the CVU pre-upgrade check

(a) As the grid user, run runcluvfy.sh from the unzipped 11.2.0.3 Grid Infrastructure installation software location to confirm whether the environment is ready for the upgrade:

./runcluvfy.sh stage -pre crsinst -upgrade -n ol6-112-rac1.localdomain,ol6-112-rac2.localdomain -rolling -src_crshome /u01/app/11.2.0/grid -dest_crshome /u02/app/11.2.0.3/grid -dest_version 11.2.0.3.0 -fixup -fixupdir /tmp -verbose

(b) Check the generated log for any component with a failed status. Resolve each failure and rerun runcluvfy.sh until every check passes.
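Step 2 is usually repeated until every check passes, so you can keep the call in a function with the cluster-specific values in variables. This sketch reuses the node names and homes from the command above. Two parts are assumptions about how to triage the verbose report, not behaviour of CVU itself: the log-file argument and the case-insensitive search for the word "failed".

```shell
# Values mirror the runcluvfy.sh command above; adjust for your cluster.
CVU_NODES="ol6-112-rac1.localdomain,ol6-112-rac2.localdomain"
SRC_CRS_HOME="/u01/app/11.2.0/grid"
DEST_CRS_HOME="/u02/app/11.2.0.3/grid"

run_cvu_preupgrade() {
  sw_dir="$1"     # unzipped 11.2.0.3 grid software containing runcluvfy.sh
  log_file="$2"   # where the verbose report is saved for review
  "$sw_dir/runcluvfy.sh" stage -pre crsinst -upgrade -n "$CVU_NODES" -rolling \
    -src_crshome "$SRC_CRS_HOME" -dest_crshome "$DEST_CRS_HOME" \
    -dest_version 11.2.0.3.0 -fixup -fixupdir /tmp -verbose >"$log_file" 2>&1
  # Heuristic: count report lines that mention a failure.
  failed=$(grep -ci 'failed' "$log_file" || true)
  echo "lines mentioning a failure: $failed"
  # Non-zero return while anything still fails, so you fix it and rerun.
  [ "$failed" -eq 0 ]
}
```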

3. Unzip the latest OPatch utility

(a) In the staging directory ($STAGE_HOME), unzip p6880880_112000_Linux-x86-64.zip:

unzip p6880880_112000_Linux-x86-64.zip

(b) As the grid user, rename the old OPatch directory in GRID_HOME:

mv $GRID_HOME/OPatch $GRID_HOME/OPatch_old

(c) As the rdbms user, rename the old OPatch directory in RDBMS_HOME:

mv $RDBMS_HOME/OPatch $RDBMS_HOME/OPatch_old

(d) As the grid user, copy the unzipped OPatch to GRID_HOME:

cp -r $STAGE_HOME/OPatch $GRID_HOME

(e) As the rdbms user, copy the unzipped OPatch to RDBMS_HOME:

cp -r $STAGE_HOME/OPatch $RDBMS_HOME
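Steps (b) to (e) do the same thing for each home, so you can express them once. The function below is a sketch; the name `replace_opatch` is hypothetical. It keeps the previous OPatch as OPatch_old and refuses to overwrite an existing OPatch_old. It then copies the new OPatch from the staging directory.

```shell
# Sketch of steps 3(b)-(e) for a single home. Run it as the grid user for
# GRID_HOME and as the rdbms user for RDBMS_HOME.
replace_opatch() {
  target_home="$1"   # GRID_HOME or RDBMS_HOME
  stage_home="$2"    # directory where p6880880 was unzipped
  if [ ! -d "$stage_home/OPatch" ]; then
    echo "no OPatch directory under $stage_home" >&2
    return 1
  fi
  if [ -e "$target_home/OPatch_old" ]; then
    echo "$target_home/OPatch_old already exists; not overwriting it" >&2
    return 1
  fi
  # Keep the current OPatch as a fallback, then copy in the new one.
  if [ -d "$target_home/OPatch" ]; then
    mv "$target_home/OPatch" "$target_home/OPatch_old" || return 1
  fi
  cp -r "$stage_home/OPatch" "$target_home/" || return 1
  echo "OPatch replaced in $target_home"
}
```

For example: `replace_opatch "$GRID_HOME" "$STAGE_HOME"`.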

4. Apply one-off patch 9413827 to the 11.2.0.1 grid home and the 11.2.0.1 RDBMS home

(a) As the rdbms user, use srvctl stop home to stop all running instances on node 1:

srvctl stop home -o $RDBMS_HOME -s ol6_rac1_status_srvctl.log -n ol6-112-rac1

(b) Run as the root user on node 1:

# $GRID_HOME/crs/install/rootcrs.pl -unlock

(c) Run as the rdbms user:

cd ../9413827/custom/server/9413827/custom/scripts/

./prepatch.sh -dbhome $RDBMS_HOME

(d) Run as the grid user:

opatch napply -local -oh $GRID_HOME -id 9413827

(e) Run as the rdbms user:

cd ../9413827/custom/server

opatch napply -local -oh $RDBMS_HOME -id 9413827

(f) Run as the root user:

# chmod +w $GRID_HOME/log/nodename/agent

# chmod +w $GRID_HOME/log/nodename/agent/crsd

(g) Run as the rdbms user:

cd ../9413827/custom/server/9413827/custom/scripts/

./postpatch.sh -dbhome $RDBMS_HOME

(h) Run as the root user:

# $GRID_HOME/crs/install/rootcrs.pl -patch

(i) As the grid user, check the interim patch status:

export PATH=$PATH:$GRID_HOME/OPatch

opatch lsinventory -detail -oh $GRID_HOME

opatch lsinventory -detail -oh $RDBMS_HOME
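You can turn the lsinventory check in step (i) into a pass/fail test. This sketch assumes 11.2 OPatch lists applied patches on lines of the form `Patch  9413827      : applied on <date>`. Confirm that against your own output before relying on it. The function name `patch_in_inventory` is hypothetical.

```shell
# Sketch: succeed only if "opatch lsinventory" for the home lists the patch.
# Assumes the "Patch  <id>  : applied on <date>" line format described above.
patch_in_inventory() {
  home="$1"       # GRID_HOME or RDBMS_HOME
  patch_id="$2"   # e.g. 9413827
  "$home/OPatch/opatch" lsinventory -oh "$home" |
    grep -Eq "^Patch +${patch_id} +: +applied on"
}
```

For example, `patch_in_inventory "$GRID_HOME" 9413827 && patch_in_inventory "$RDBMS_HOME" 9413827` succeeds only when the patch is recorded in both homes.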

5. Apply one-off patch 12652740 to the 11.2.0.1 grid home and the 11.2.0.1 Oracle home

(a) Run as the rdbms user on node 1:

$RDBMS_HOME/bin/srvctl stop home -o $RDBMS_HOME -s ol6_rac1_status_srvctl.log -n ol6-112-rac1

(b) Run as the root user on node 1:

# $GRID_HOME/crs/install/rootcrs.pl -unlock

(c) Run as the grid user:

cd $UNZIPPED_PATH_LOCATION/12652740

$GRID_HOME/OPatch/opatch napply -oh $GRID_HOME -local

(d) Run as the rdbms user:

cd $UNZIPPED_PATH_LOCATION/12652740

$RDBMS_HOME/OPatch/opatch napply -oh $RDBMS_HOME -local

(e) Run as the root user:

# $GRID_HOME/rdbms/install/rootadd_rdbms.sh

# $GRID_HOME/crs/install/rootcrs.pl -patch

(f) As the grid user, check the interim patch status:

export PATH=$PATH:$GRID_HOME/OPatch

opatch lsinventory -detail -oh $GRID_HOME

opatch lsinventory -detail -oh $RDBMS_HOME

(g) Perform steps (a)-(f) on node 2.

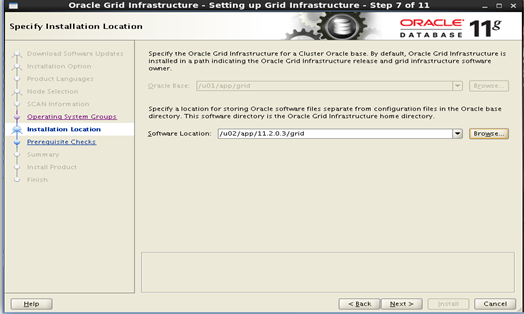
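Steps (a) to (f) must be repeated on node 2, and each command has to be run by a specific OS user. A printed run sheet per node can reduce mistakes. This is a sketch only: the function prints commands and runs nothing. The `<node>_status_srvctl.log` state-file name and the patch-directory argument are hypothetical.

```shell
# Sketch only: prints the step 5 commands for one node, each tagged with the
# OS user that runs it. Nothing is executed.
print_oneoff_runsheet() {
  node="$1"        # e.g. ol6-112-rac2
  patch_dir="$2"   # directory holding the unzipped 12652740 patch
  cat <<EOF
[rdbms] \$RDBMS_HOME/bin/srvctl stop home -o \$RDBMS_HOME -s ${node}_status_srvctl.log -n $node
[root]  \$GRID_HOME/crs/install/rootcrs.pl -unlock
[grid]  cd $patch_dir && \$GRID_HOME/OPatch/opatch napply -oh \$GRID_HOME -local
[rdbms] cd $patch_dir && \$RDBMS_HOME/OPatch/opatch napply -oh \$RDBMS_HOME -local
[root]  \$GRID_HOME/rdbms/install/rootadd_rdbms.sh
[root]  \$GRID_HOME/crs/install/rootcrs.pl -patch
[grid]  \$GRID_HOME/OPatch/opatch lsinventory -detail -oh \$GRID_HOME
[grid]  \$GRID_HOME/OPatch/opatch lsinventory -detail -oh \$RDBMS_HOME
EOF
}
```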

6. Upgrade the Grid Infrastructure from 11.2.0.1 to 11.2.0.3 using the unzipped 11.2.0.3 software

(a) Start the installation with ./runInstaller.

(b) Run the rootupgrade.sh script as the root user on node 1 and then on node 2:

[root@ol6-112-rac1 grid_1]# ./rootupgrade.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid/app/11.2.0.3/grid_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

There is no ASM configured.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.crsd' on 'ol6-112-rac1'

CRS-2790: Starting shutdown of Cluster Ready Services-managed resources on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN3.lsnr' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.LISTENER_SCAN2.lsnr' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.LISTENER.lsnr' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.LISTENER_SCAN2.lsnr' on 'ol6-112-rac1' succeeded

CRS-2673: Attempting to stop 'ora.scan2.vip' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.LISTENER.lsnr' on 'ol6-112-rac1' succeeded

CRS-2673: Attempting to stop 'ora.ol6-112-rac1.vip' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.LISTENER_SCAN3.lsnr' on 'ol6-112-rac1' succeeded

CRS-2673: Attempting to stop 'ora.scan3.vip' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.scan2.vip' on 'ol6-112-rac1' succeeded

CRS-2672: Attempting to start 'ora.scan2.vip' on 'ol6-112-rac2'

CRS-2677: Stop of 'ora.ol6-112-rac1.vip' on 'ol6-112-rac1' succeeded

CRS-2672: Attempting to start 'ora.ol6-112-rac1.vip' on 'ol6-112-rac2'

CRS-2677: Stop of 'ora.scan3.vip' on 'ol6-112-rac1' succeeded

CRS-2672: Attempting to start 'ora.scan3.vip' on 'ol6-112-rac2'

CRS-2676: Start of 'ora.scan2.vip' on 'ol6-112-rac2' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN2.lsnr' on 'ol6-112-rac2'

CRS-2676: Start of 'ora.ol6-112-rac1.vip' on 'ol6-112-rac2' succeeded

CRS-2676: Start of 'ora.scan3.vip' on 'ol6-112-rac2' succeeded

CRS-2672: Attempting to start 'ora.LISTENER_SCAN3.lsnr' on 'ol6-112-rac2'

CRS-2676: Start of 'ora.LISTENER_SCAN2.lsnr' on 'ol6-112-rac2' succeeded

CRS-2676: Start of 'ora.LISTENER_SCAN3.lsnr' on 'ol6-112-rac2' succeeded

CRS-2673: Attempting to stop 'ora.eons' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.ons' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.ons' on 'ol6-112-rac1' succeeded

CRS-2673: Attempting to stop 'ora.net1.network' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.net1.network' on 'ol6-112-rac1' succeeded

CRS-2677: Stop of 'ora.eons' on 'ol6-112-rac1' succeeded

CRS-2792: Shutdown of Cluster Ready Services-managed resources on 'ol6-112-rac1' has completed

CRS-2677: Stop of 'ora.crsd' on 'ol6-112-rac1' succeeded

CRS-2673: Attempting to stop 'ora.gpnpd' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.cssdmonitor' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.ctssd' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.evmd' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.mdnsd' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.cssdmonitor' on 'ol6-112-rac1' succeeded

CRS-2677: Stop of 'ora.gpnpd' on 'ol6-112-rac1' succeeded

CRS-2677: Stop of 'ora.evmd' on 'ol6-112-rac1' succeeded

CRS-2677: Stop of 'ora.mdnsd' on 'ol6-112-rac1' succeeded

CRS-2677: Stop of 'ora.ctssd' on 'ol6-112-rac1' succeeded

CRS-2673: Attempting to stop 'ora.cssd' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.cssd' on 'ol6-112-rac1' succeeded

CRS-2673: Attempting to stop 'ora.diskmon' on 'ol6-112-rac1'

CRS-2673: Attempting to stop 'ora.gipcd' on 'ol6-112-rac1'

CRS-2677: Stop of 'ora.gipcd' on 'ol6-112-rac1' succeeded

CRS-2677: Stop of 'ora.diskmon' on 'ol6-112-rac1' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'ol6-112-rac1' has completed

CRS-4133: Oracle High Availability Services has been stopped.

OLR initialization - successful

Replacing Clusterware entries in upstart

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 11g Release 2.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

Preparing packages for installation...

cvuqdisk-1.0.9-1

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

(c) Verify the clusterware release, active, and software versions:

crsctl query crs releaseversion

crsctl query crs activeversion

crsctl query crs softwareversion
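You can also script the version checks in step (c). This sketch assumes the 11.2 output format, in which crsctl prints the version in square brackets, for example `Oracle Clusterware active version on the cluster is [11.2.0.3.0]`. The function names are hypothetical, and GRID_HOME must point at the new 11.2.0.3 grid home.

```shell
# Extract the version from the square brackets in crsctl output.
bracket_version() {
  # Only digits and dots are accepted inside the brackets, so a bracketed
  # node name (as in softwareversion output) is skipped.
  sed -n 's/.*\[\([0-9.]*\)\].*/\1/p'
}

# Compare the cluster's active version with the expected target.
check_active_version() {
  expected="$1"
  actual=$("$GRID_HOME/bin/crsctl" query crs activeversion | bracket_version)
  if [ "$actual" != "$expected" ]; then
    echo "active version is '$actual', expected '$expected'" >&2
    return 1
  fi
  echo "active version is $actual"
}
```

In a rolling upgrade, the active version only changes to 11.2.0.3.0 after rootupgrade.sh has completed on the last node. Run `check_active_version 11.2.0.3.0` after node 2 is done.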

7. Apply patchset update 11.2.0.3.10 to the 11.2.0.3.0 Grid Infrastructure

(a) Complete step 3 above to copy the latest OPatch version into the new Grid Infrastructure home.

(b) Unzip the 11.2.0.3.10 PSU into an empty directory:

unzip p18139678_112030_Linux-x86-64.zip -d /u03/stage_grid/Upgrade/new

(c) Create the OCM response file. By default, emocmrsp writes ocm.rsp to the current working directory, so running it from $GRID_HOME/OPatch/ocm/bin creates $GRID_HOME/OPatch/ocm/bin/ocm.rsp:

cd $GRID_HOME/OPatch/ocm/bin

./emocmrsp

(d) As the root user, add OPatch to the PATH and run opatch auto from the patch directory:

export PATH=$PATH:$GRID_HOME/OPatch

which opatch

cd /u03/stage_grid/Upgrade/new

opatch auto -oh $GRID_HOME -ocmrf $GRID_HOME/OPatch/ocm/bin/ocm.rsp

(e) Output of the opatch auto command:

Executing /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/perl/bin/perl /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/OPatch/crs/patch11203.pl -patchdir /u03/stage_grid/Upgrade -patchn new -oh /u01/app/11.2.0/grid/app/11.2.0.3/grid_1 -ocmrf /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/OPatch/ocm/bin/ocm.rsp -paramfile /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/crs/install/crsconfig_params

This is the main log file: /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/cfgtoollogs/opatchauto2014-05-10_13-37-05.log

This file will show your detected configuration and all the steps that opatchauto attempted to do on your system: /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/cfgtoollogs/opatchauto2014-05-10_13-37-05.report.log

2014-05-10 13:37:05: Starting Clusterware Patch Setup

Using configuration parameter file: /u01/app/11.2.0/grid/app/11.2.0.3/grid_1/crs/install/crsconfig_params

Enter 'yes' if you have unzipped this patch to an empty directory to proceed (yes/no):yes

Enter 'yes' if you have unzipped this patch to an empty directory to proceed (yes/no):yes

Stopping CRS...

Stopped CRS successfully

patch /u03/stage_grid/Upgrade/new/18031683 apply successful for home /u01/app/11.2.0/grid/app/11.2.0.3/grid_1

Starting CRS...

CRS-4123: Oracle High Availability Services has been started.

opatch auto succeeded.

(f) Run as the grid user:

opatch lsinventory
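opatch auto asks you to confirm that the patch was unzipped into an empty directory, so it is worth enforcing that in step (b). The function below is a sketch; the name `unzip_into_empty_dir` is hypothetical. It refuses to unzip into a directory that already has content.

```shell
# Sketch: unzip a patch only into a new or empty directory, which is what
# the opatch auto prompt asks you to confirm.
unzip_into_empty_dir() {
  zip_file="$1"     # e.g. p18139678_112030_Linux-x86-64.zip
  target_dir="$2"   # e.g. /u03/stage_grid/Upgrade/new
  if [ -d "$target_dir" ] && [ -n "$(ls -A "$target_dir")" ]; then
    echo "$target_dir is not empty; choose another directory" >&2
    return 1
  fi
  mkdir -p "$target_dir" || return 1
  unzip -q "$zip_file" -d "$target_dir"
}
```

For example: `unzip_into_empty_dir p18139678_112030_Linux-x86-64.zip /u03/stage_grid/Upgrade/new`.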

8. Install the 11.2.0.3 RDBMS software

(a) Install the software using runInstaller.

(b) Run the root.sh script as the root user on node 1 and then on node 2:

[root@ol6-112-rac1 db_2]# ./root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/orcl/oracle/product/11.2.0.3/db_2

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

9. Apply patchset update 11.2.0.3.10 to the new RDBMS binaries

(a) Add an entry for the new RDBMS home to the oratab file, for example

dummy:/u01/app/orcl/oracle/product/11.2.0.3/db_2:N

and then set the environment for the "dummy" SID using oraenv:

[oracle@ol6-112-rac1 ~]$ . oraenv

ORACLE_SID = [dummy] ? dummy

The Oracle base has been set to /u01/app/orcl/oracle

[oracle@ol6-112-rac1 ~]$

(b) Complete step 3 above to copy the latest OPatch version into the new RDBMS home.

(c) Add the OPatch directory to the PATH environment variable:

export PATH=$PATH:$ORACLE_HOME/OPatch

(d) Change to the 18031683 directory inside the unzipped p18139678_112030_Linux-x86-64.zip and apply patchset update 11.2.0.3.10 to the new RDBMS binaries. OPatch applies the patch to the remote node as well.

[oracle@ol6-112-rac1 18031683]$ pwd

/u03/stage_oracle/18031683/

[oracle@ol6-112-rac1 18031683]$ opatch apply

Oracle Interim Patch Installer version 11.2.0.3.6

Copyright (c) 2013, Oracle Corporation. All rights reserved.

Oracle Home : /u01/app/orcl/oracle/product/11.2.0.3/db_2

Central Inventory : /u01/app/oraInventory

from : /u01/app/orcl/oracle/product/11.2.0.3/db_2/oraInst.loc

OPatch version : 11.2.0.3.6

OUI version : 11.2.0.3.0

Log file location : /u01/app/orcl/oracle/product/11.2.0.3/db_2/cfgtoollogs/opatch/opatch2014-05-10_15-26-51PM_1.log

Verifying environment and performing prerequisite checks...

OPatch continues with these patches: 13343438 13696216 13923374 14275605 14727310 16056266 16619892 16902043 17540582 18031683

(e) Verify that the patchset update has been applied:

opatch lsinventory

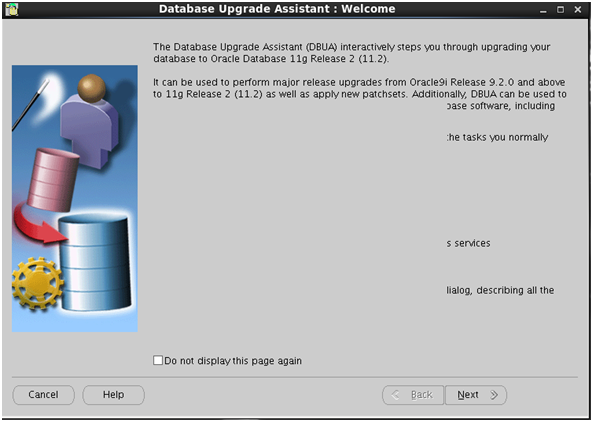
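Step 9(a) edits oratab by hand. The sketch below does it idempotently, adding the entry only if no line for that SID exists. The function name `add_oratab_entry` is hypothetical. Writing to /etc/oratab normally requires the oracle user or root.

```shell
# Sketch: append "<sid>:<home>:N" to an oratab file unless the SID is
# already listed. The file path is a parameter so it can be tested on a copy.
add_oratab_entry() {
  oratab="$1"   # normally /etc/oratab
  sid="$2"      # e.g. dummy
  home="$3"     # e.g. /u01/app/orcl/oracle/product/11.2.0.3/db_2
  if grep -q "^${sid}:" "$oratab" 2>/dev/null; then
    echo "entry for $sid already present in $oratab"
    return 0
  fi
  printf '%s:%s:N\n' "$sid" "$home" >> "$oratab"
}
```

For example, run `add_oratab_entry /etc/oratab dummy /u01/app/orcl/oracle/product/11.2.0.3/db_2` first. Then export `ORACLE_SID=dummy` and `ORAENV_ASK=NO` before sourcing `. oraenv`, which sets the environment without the interactive prompt.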

10. Start DBUA to upgrade the 11.2.0.1 database to 11.2.0.3.10.

11. Detach the 11.2.0.1.0 grid home and RDBMS home

(a) Detach the 11.2.0.1.0 grid home on node 1 and node 2:

/u01/app/11.2.0/grid/oui/bin/runInstaller -silent -detachHome -invPtrLoc /etc/oraInst.loc ORACLE_HOME="/u01/app/11.2.0/grid" ORACLE_HOME_NAME="grid_home1" CLUSTER_NODES="{ol6-112-rac1,ol6-112-rac2}" -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 4067 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'DetachHome' was successful.

(b) Detach the 11.2.0.1.0 RDBMS home on node 1 and node 2:

/u01/app/orcl/oracle/product/11.2.0/db_1/oui/bin/runInstaller -silent -detachHome -invPtrLoc /etc/oraInst.loc ORACLE_HOME="/u01/app/orcl/oracle/product/11.2.0/db_1" ORACLE_HOME_NAME="OraDb11g_home1" CLUSTER_NODES="{ol6-112-rac1,ol6-112-rac2}" -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3885 MB Passed

The inventory pointer is located at /etc/oraInst.loc

The inventory is located at /u01/app/oraInventory

'DetachHome' was successful.

(c) Check that the 11.2.0.1 grid and Oracle homes are no longer in the inventory:

opatch lsinventory -oh old_grid_home

opatch lsinventory -oh old_rdbms_home
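Steps (a) and (b) run the same runInstaller command against different homes, so one function can cover both. A second function adds a quick inventory check alongside step (c). Both are sketches, and the function names are hypothetical. `home_detached` assumes OUI marks a detached home with `REMOVED="T"` on its HOME entry in ContentsXML/inventory.xml. Confirm this in your own inventory first.

```shell
# Node list copied from the detach commands above.
CLUSTER_NODE_LIST="{ol6-112-rac1,ol6-112-rac2}"

# Detach one Oracle home from the central inventory on the local node.
detach_home() {
  home="$1"        # e.g. /u01/app/11.2.0/grid
  home_name="$2"   # e.g. grid_home1
  "$home/oui/bin/runInstaller" -silent -detachHome -invPtrLoc /etc/oraInst.loc \
    ORACLE_HOME="$home" ORACLE_HOME_NAME="$home_name" \
    CLUSTER_NODES="$CLUSTER_NODE_LIST" -local
}

# Succeed if the home's inventory entry carries the (assumed) REMOVED="T" flag.
home_detached() {
  inventory_xml="$1"  # e.g. /u01/app/oraInventory/ContentsXML/inventory.xml
  home="$2"
  grep "LOC=\"$home\"" "$inventory_xml" | grep -q 'REMOVED="T"'
}
```

For example, on each node run `detach_home /u01/app/11.2.0/grid grid_home1` and `detach_home /u01/app/orcl/oracle/product/11.2.0/db_1 OraDb11g_home1`.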
